Knowledge base articles are the cornerstone of effective customer self-service, giving customers instant access to answers for the questions they are most likely to ask.

Well-crafted articles not only empower customers to solve problems independently but also streamline support operations by reducing the volume of direct inquiries.

However, the clarity and conciseness of these articles are crucial. They must be easily understandable, precisely addressing the user's concerns without overwhelming them with excessive information.

In this post, we’ll outline some essential tips for creating clear and concise knowledge base content that enhances user experience and fosters customer autonomy.

1. Focus on Audience Understanding

Before you start writing your knowledge base articles, identify who will be reading them. The content should be tailored to the specific needs, understanding levels, and search behaviors of your target audience, which might include customers, employees, or tech support teams.

Here’s how you can align your content with the audience:

- Audience Identification: Determine whether your readers are beginners, intermediates, or experts in the subject matter. This tells you how much detail to include and how complex your language can be. For example, articles for technical support teams may need to go into more technical depth than those aimed at general customers.

- Addressing User Intent: Consider what the reader is likely to be looking for when they consult a knowledge base article. Are they trying to solve a problem, looking for specific information, or trying to understand how to use a product more effectively? Each scenario calls for a different structure and emphasis.

- Content Customization: Customize your content to meet user needs efficiently. For instance, if your knowledge base is customer-facing, the articles should help customers resolve issues quickly, without technical jargon that could confuse them. If the articles are for internal use, they can be more technical, focusing on troubleshooting deeper issues or explaining processes in detail.

By clearly understanding and defining your audience, you can create knowledge base articles that are not only informative but also directly relevant and useful to the reader’s specific needs.

2. Write Clear Titles

The title of a knowledge base article plays a pivotal role in how effectively the information reaches the user. A well-crafted title ensures that the article is easy to find and immediately understandable, setting the stage for the content that follows.

Here’s how to craft effective titles:

- Clarity and Relevance: The title should clearly reflect the content of the article. Use straightforward language that your audience would naturally type into a search bar. For example, instead of a creative but vague title like "Conquering Connectivity Issues," opt for a more direct and searchable phrase like "How to Troubleshoot Wi-Fi Connection Problems."

- Use of Keywords: Incorporate relevant keywords that users are likely to search for. This improves the searchability of the article not only within the knowledge base but also in external search engines. For instance, if the article is about resetting a password, the title should include "reset" and "password."

- Action-Oriented Language: Starting titles with verbs can guide users towards solutions effectively. Titles like "Setting Up Your Email Account" or "Exporting Data from XYZ Software" are direct and help the user understand immediately what the article will help them accomplish.

- Brevity: Keep the title concise yet descriptive. A lengthy title can be cumbersome and may deter readers. Aim for a balance where the title is short enough to grasp at a glance but descriptive enough to be informative.

By focusing on these elements, your knowledge base article titles will be optimized to catch the attention of those who need them, providing a clear indication of the content that follows and ensuring users can find quick solutions to their queries.

3. Ensure Proper Structure and Format

A well-organized article makes it easier for users to find the information they need and follow instructions accurately.

Here is how to structure your content for maximum impact:

- Logical Flow: Organize your article in a logical sequence that naturally progresses from introduction to conclusion. Start with a brief overview or summary that informs the reader about what the article will cover. This helps set expectations and provides a roadmap of the content.

- Use of Headings and Subheadings: Divide your article into sections with clear headings and subheadings (H2s, H3s, etc.). This not only breaks the content into manageable chunks but also helps in navigating through the article. For example, headings like “Introduction,” “Step-by-Step Guide,” and “Troubleshooting” clearly delineate different parts of the article.

- Bullet Points and Numbered Lists: When outlining steps, requirements, or key points, use bullet points and numbered lists. This format is easier to scan and follow, especially for users who might be trying to resolve an issue and need quick and clear instructions. For instance, a troubleshooting guide could list steps as numbered items to ensure the user performs them in the correct order.

- Consistency: Maintain a consistent format throughout your knowledge base. This includes consistent terminology, style, and layout. Consistency reduces confusion and makes your articles look more professional and easier to understand.

- Summary and Conclusion: End each article with a summary or conclusion that recaps the main points covered. This reinforces the information and ensures that the key messages are communicated effectively. If applicable, include next steps or links to further resources.

By carefully structuring your knowledge base articles, you ensure that they are not only informative but also user-friendly, making it easier for your audience to find and apply the information they need effectively.

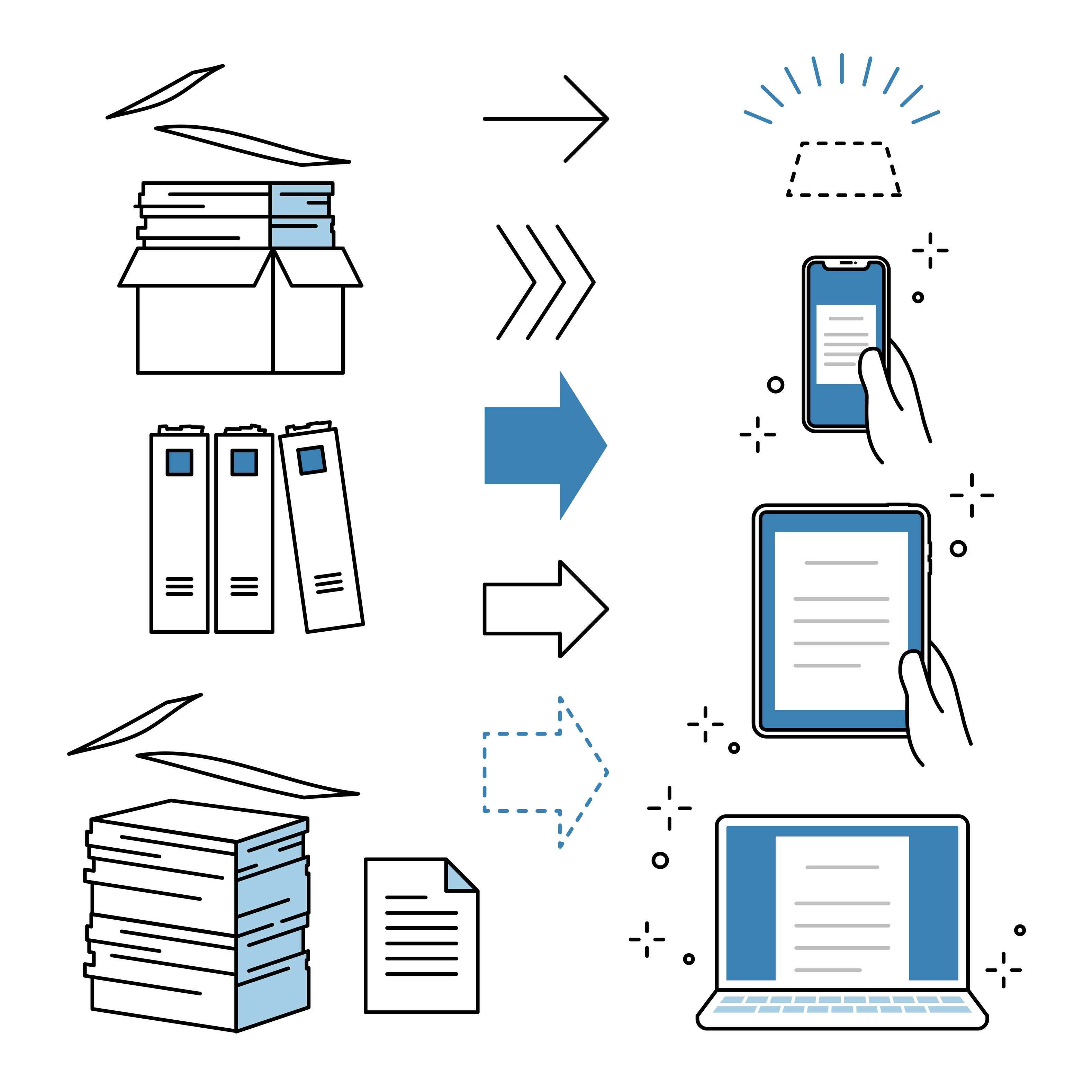
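The headings described above can also double as a hyperlinked table of contents, one of the navigation aids covered later in this article. The sketch below is a minimal illustration, assuming articles are written in Markdown with `##` and `###` headings; `build_toc` and the simplified `slugify` are hypothetical helpers, and because each publishing platform generates its own anchor IDs, the links may need adjusting for yours.

```python
import re

# H2 and H3 headings in Markdown ATX style, e.g. "## Step-by-Step Guide".
HEADING = re.compile(r"^(#{2,3})\s+(.+?)\s*$")


def slugify(title: str) -> str:
    """Simplified anchor ID: lowercase, strip punctuation, spaces become hyphens.

    This is an assumption; each publishing platform has its own slug rules.
    """
    slug = re.sub(r"[^\w\s-]", "", title.lower())
    return re.sub(r"\s+", "-", slug.strip())


def build_toc(markdown: str) -> str:
    """Return a nested Markdown list linking to every H2 and H3 heading."""
    entries = []
    for line in markdown.splitlines():
        match = HEADING.match(line)
        if match:
            level = len(match.group(1))
            title = match.group(2)
            indent = "  " * (level - 2)  # H2 at top level, H3 nested one step
            entries.append(f"{indent}- [{title}](#{slugify(title)})")
    return "\n".join(entries)


article = """# How to Troubleshoot Wi-Fi Connection Problems
## Introduction
## Step-by-Step Guide
### Restart Your Router
## Troubleshooting
"""

print(build_toc(article))
# - [Introduction](#introduction)
# - [Step-by-Step Guide](#step-by-step-guide)
#   - [Restart Your Router](#restart-your-router)
# - [Troubleshooting](#troubleshooting)
```

The H1 title is skipped on purpose, since the table of contents sits under it and only needs to point at the sections.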

4. Include Visual Aids

Visual aids enhance the comprehension and engagement of your knowledge base articles. Properly selected and integrated visuals can break down complex information, illustrate steps more clearly, and make the content more appealing.

Here's how to effectively use visuals in your knowledge base articles:

- Relevance and Support: Choose images, screenshots, and videos that are directly relevant to the content. For instance, if the article is about setting up a software application, include screenshots of each step. This not only makes the instructions clearer but also helps users to visually verify that they are following the steps correctly.

- Quality and Clarity: Ensure that all visual elements are of high quality. Blurry or poorly cropped images can detract from the user experience. Visuals should be clear enough to be easily understood at a glance. For videos, ensure that they are well-lit and the audio is clear, as these factors significantly affect their instructional value.

- Annotations and Highlights: Use annotations, such as arrows, circles, or labels, to draw attention to the most important parts of an image or screenshot. For videos, consider using on-screen text or highlights to point out key features or steps, especially during critical parts of the demonstration.

- Accessibility: Include alternative text (alt text) for images and captions or transcripts for videos. This not only helps users who rely on screen readers but also enhances the SEO of your articles. Alt text should describe the visual in a way that conveys its purpose within the article.

- Consistency: Maintain a consistent style and format for all visuals across your knowledge base. This consistency in visual design contributes to a cohesive user experience and reinforces your brand identity.

By integrating these types of visuals thoughtfully, your knowledge base articles become more practical and user-friendly. Visual aids not only improve the user's ability to understand and follow the instructions but also enhance the overall look and feel of your knowledge base, making it a more inviting resource for self-service.

5. Maintain Simple Language and Tone

The effectiveness of a knowledge base article largely depends on the clarity and simplicity of its language. A well-written article should be easily understandable by anyone who reads it, regardless of their technical expertise.

Here is how you can ensure your writing style and language are appropriate:

- Simplicity is Key: Use simple, direct language that is easy to understand. Avoid jargon, technical terms, or complex vocabulary that might confuse the reader. For instance, instead of saying "initiate," you can use "start," and instead of "terminate," use "stop." This makes the content more accessible to a broader audience.

- Active Voice: Write in the active voice as much as possible. The active voice makes sentences clearer and more engaging. For example, instead of writing "The document can be saved by clicking the save button," write "Click the save button to save the document."

- Targeted at Specific Roles: Tailor your language to the function or department that relies on the knowledge base. A term that would be jargon to a general customer can be the clearest choice for a specialist audience. For example, articles that support sales teams can use terms like "revenue enablement" to connect the content directly with their daily activities and goals.

- Conciseness: Be concise in your writing. Avoid overly long sentences and paragraphs that could make the content harder to follow. Each sentence should contribute to your argument or explanation without redundancy.

- Consistency: Consistent terminology reduces confusion. Stick to one term for each concept throughout the article. For example, if you choose "folder" instead of "directory," use "folder" throughout the document.

- Use of Bullet Points and Lists: Where applicable, use bullet points and numbered lists to break down information into easily digestible pieces. This makes the content easier to skim and helps readers distinguish individual steps or key points.

- Empathetic Tone: While the tone should be professional, it should also be empathetic. Acknowledge the reader’s frustrations and offer reassurance. Phrases like "This process may take a few minutes, but you can use this time to..." can make the instructions more relatable and less daunting.

By adhering to these writing principles, you can create knowledge base articles that are not only informative but also pleasant and easy to read, ensuring that users feel supported and proficient in managing their queries or issues.
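Keeping terminology consistent is easier with a small automated check. The sketch below assumes a hypothetical style-guide mapping built from the examples in this section ("directory" to "folder", "initiate" to "start", "terminate" to "stop"); `check_terminology` is an illustrative helper, not part of any particular tool.

```python
import re

# Hypothetical style guide: discouraged term -> preferred term.
PREFERRED_TERMS = {
    "directory": "folder",
    "initiate": "start",
    "terminate": "stop",
}


def check_terminology(text: str) -> list[str]:
    """Flag discouraged terms with their line number and suggested replacement."""
    warnings = []
    for number, line in enumerate(text.splitlines(), start=1):
        for avoid, prefer in PREFERRED_TERMS.items():
            # \b matches whole words only, so "subdirectory" or "directories"
            # are not flagged; extend the mapping to cover such variants.
            if re.search(rf"\b{re.escape(avoid)}\b", line, re.IGNORECASE):
                warnings.append(f'line {number}: use "{prefer}" instead of "{avoid}"')
    return warnings


draft = "Open the Documents directory.\nClick Save to initiate the upload."
for warning in check_terminology(draft):
    print(warning)
# line 1: use "folder" instead of "directory"
# line 2: use "start" instead of "initiate"
```

Keeping the mapping in one place means the style guide and the check cannot drift apart: adding a term to the dictionary is all it takes to enforce it.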

6. Incorporate Internal Linking and Navigation

Effective navigation ensures that users can find the information they need quickly and without frustration. Well-thought-out navigation aids (discussed below) not only improve user experience but also increase the efficiency of information retrieval.

Here's how to enhance navigation in your knowledge base:

- Internal Linking: Use internal links wisely to connect various articles within your knowledge base. This helps users easily access related topics without having to search for them separately. For example, in an article about setting up marketing reporting, include links to related articles like troubleshooting data integration issues or exporting reports to clients.

- Search Functionality: Incorporate a robust search feature that allows users to enter keywords and quickly find relevant articles. Ensure that the search engine can handle variations in phrasing and common misspellings to improve the chances of users finding what they need on their first try.

- Breadcrumbs and Navigation Bars: Utilize breadcrumbs and navigation bars to show users their current location within the knowledge base. This is particularly useful in complex knowledge bases with multiple categories and subcategories, as it helps users track their path back to broader topics or sections.

- Categorization: Organize articles into clear, logical categories and subcategories. For instance, separate articles related to account management, technical troubleshooting, and usage tips. This categorization should reflect the way users think about and segment the information related to your products or services.

- Use of Tags and Filters: Tags and filters make articles easier to discover by letting users narrow down or sort content by attributes such as publication date, relevance, or topic. This is particularly useful in larger knowledge bases where the volume of articles can be overwhelming.

- Table of Contents: For longer articles, include a table of contents with hyperlinked section titles at the beginning of the article. This allows users to quickly jump to the section that is most relevant to their needs, enhancing their experience by saving time and effort.

By improving the navigational tools within your knowledge base, you make it easier for users to find the right information at the right time, thereby enhancing their overall experience and satisfaction with your support resources.
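Tolerating misspellings is normally the job of the search engine behind your knowledge base, but the idea can be sketched with Python's standard-library `difflib`, which scores how similar two strings are. This is a minimal illustration under stated assumptions: the article titles are hypothetical, only titles are indexed, results are not ranked, and the 0.75 similarity cutoff is an assumed value to tune against real queries. `difflib` stands in here for whatever fuzzy matching your search tool provides.

```python
import difflib
from collections import defaultdict

# Hypothetical article titles; a real knowledge base would load these from its CMS.
ARTICLES = [
    "How to Reset Your Password",
    "How to Troubleshoot Wi-Fi Connection Problems",
    "Exporting Data from XYZ Software",
]


def build_index(titles: list[str]) -> dict[str, set[str]]:
    """Map each lowercase word in a title to the set of titles containing it."""
    index = defaultdict(set)
    for title in titles:
        for word in title.lower().split():
            index[word].add(title)
    return dict(index)


def search(query: str, index: dict[str, set[str]], cutoff: float = 0.75) -> set[str]:
    """Return titles containing any query word or a close misspelling of it."""
    vocabulary = list(index)
    results = set()
    for word in query.lower().split():
        # get_close_matches compares similarity ratios (0.0 to 1.0) against cutoff.
        for match in difflib.get_close_matches(word, vocabulary, n=3, cutoff=cutoff):
            results |= index[match]
    return results


index = build_index(ARTICLES)
print(search("pasword reset", index))   # {'How to Reset Your Password'}
print(search("wifi conection", index))  # {'How to Troubleshoot Wi-Fi Connection Problems'}
```

A lower cutoff catches more typos but also returns more unrelated articles, so it is worth testing against queries taken from your own search logs.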

7. Maintain and Update the Content

To keep your knowledge base a valuable resource, its content must stay up to date and relevant. Regular reviews and updates make sure articles reflect changes in your products, services, and customer needs.

Here’s how to effectively maintain your knowledge base articles:

- Regular Reviews: Establish a schedule for regularly reviewing knowledge base articles. This routine check ensures that all information is current, accurate, and reflects the latest product updates or service changes. For instance, if you release a new software update, review related articles to incorporate new features or changes in operation.

- Feedback Mechanisms: Implement feedback mechanisms such as comments, ratings, or direct feedback forms at the end of each article. This allows users to report errors, suggest improvements, or express satisfaction. Pay attention to this feedback as it is a direct line to your users' needs and experiences.

- Analytics: Use analytics to track the usage of your knowledge base articles. Look for patterns such as high-traffic articles, articles with high exit rates, or those that are seldom read. These metrics can guide you in identifying which articles need more attention or updating to better serve your users.

- Version Control: Keep track of changes to each article. When updates are made, use a version control system to log what was changed, why, and by whom. This not only preserves the history of an article but also lets you revert to previous versions if needed.

- Collaborative Updates: Encourage collaboration among team members who are subject matter experts to ensure that the content is not only accurate but also comprehensive. This collaborative approach helps in pooling diverse knowledge and perspectives, enhancing the quality and reliability of your knowledge base.

- Consistency Check: As you update articles, ensure that changes are consistent across all related content. Consistency in terminology, style, and presentation across articles helps maintain a professional and coherent user experience.

By continuously monitoring, updating, and refining your knowledge base, you ensure that it remains a reliable and effective tool for users seeking assistance.
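The analytics review described above can start with a simple calculation. This sketch assumes you can export per-article pageviews and exits from your analytics tool; the `ArticleStats` class, the thresholds, and the numbers are hypothetical. Exit rate here means exits divided by pageviews. On a help article, a high exit rate can also mean the reader found the answer and left satisfied, so treat the flagged list as a review queue rather than a verdict.

```python
from dataclasses import dataclass


@dataclass
class ArticleStats:
    title: str
    pageviews: int
    exits: int  # sessions whose last page viewed was this article

    @property
    def exit_rate(self) -> float:
        return self.exits / self.pageviews if self.pageviews else 0.0


def high_exit_articles(stats: list[ArticleStats], min_views: int = 100,
                       max_exit_rate: float = 0.6) -> list[str]:
    """Titles with enough traffic to judge and an exit rate above the threshold."""
    flagged = [s for s in stats if s.pageviews >= min_views and s.exit_rate > max_exit_rate]
    return [s.title for s in sorted(flagged, key=lambda s: s.exit_rate, reverse=True)]


def rarely_read_articles(stats: list[ArticleStats], min_views: int = 100) -> list[str]:
    """Titles that fall below the traffic threshold for the period."""
    return [s.title for s in stats if s.pageviews < min_views]


# Hypothetical monthly export.
stats = [
    ArticleStats("How to Reset Your Password", pageviews=1200, exits=300),
    ArticleStats("Exporting Data from XYZ Software", pageviews=450, exits=360),
    ArticleStats("Setting Up Your Email Account", pageviews=40, exits=35),
]

print(high_exit_articles(stats))    # ['Exporting Data from XYZ Software']
print(rarely_read_articles(stats))  # ['Setting Up Your Email Account']
```

The minimum-traffic filter keeps articles with only a handful of views from being flagged on noise alone; those articles show up in the seldom-read list instead.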

8. Optimize for Search Engines

Optimizing your knowledge base articles for search, both internally within the knowledge base and externally via search engines, is crucial for ensuring that users can find the help they need quickly and easily.

Here are some essential tips for enhancing the searchability of your knowledge base content:

- Keyword Integration: Identify the keywords and phrases that users are most likely to search for when looking for information related to your articles. Incorporate these keywords naturally throughout the text, especially in titles, headings, and the first few sentences of the content. This helps improve the visibility of your articles in search results.

- SEO Best Practices: Apply general SEO principles to your knowledge base articles. This includes using meta descriptions, alt text for images, and proper URL structures. Meta descriptions should succinctly summarize the article's content using relevant keywords. They may not affect rankings directly, but search engines often show them as the snippet under your title, so a clear description can encourage more clicks.

- Rich Snippets and Structured Data: Utilize structured data markup (such as Schema.org) to help search engines understand the content of your articles better. This can also enable rich snippets in search results, which can make your articles more attractive and clickable when they appear in Google search results.

- Mobile Optimization: Ensure that your knowledge base is mobile-friendly. With the increasing use of mobile devices to access information, having a responsive design that works well on smartphones and tablets is essential. This also affects your articles' rankings in search engines, as mobile-friendliness is a ranking factor.

- Internal Linking Structure: Develop a robust internal linking structure within your knowledge base. Linking articles to each other not only helps users navigate related topics easily but also allows search engines to crawl and index your content more effectively. Ensure that the anchor text used for links is descriptive and relevant to the linked article.

- Regular Content Audits: Periodically audit your knowledge base content to ensure that all articles are optimized for search. This includes checking for broken links, outdated content, and opportunities to improve SEO through better keyword usage or updated information.

By focusing on these optimization strategies, you can greatly enhance the accessibility and visibility of your knowledge base articles, making it easier for users to find the information they need through search engines and within your own site.
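As an example of the structured data mentioned above, the sketch below emits a JSON-LD block using the Schema.org `TechArticle` type with the standard `headline`, `description`, `url`, and `dateModified` properties. The helper name, URL, and values are hypothetical. Markup helps search engines interpret a page but does not guarantee any rich result, so check each search engine's structured-data guidelines for the properties it actually uses.

```python
import json


def tech_article_jsonld(headline: str, description: str, url: str,
                        date_modified: str) -> str:
    """Return a <script> tag containing Schema.org TechArticle markup as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "TechArticle",
        "headline": headline,
        "description": description,
        "url": url,
        "dateModified": date_modified,  # ISO 8601 date, e.g. "2024-05-01"
    }
    # Escape "</" so article text can never close the <script> tag early;
    # "\/" is a valid JSON escape for "/".
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


print(tech_article_jsonld(
    headline="How to Reset Your Password",
    description="Steps to reset a forgotten password from the sign-in page.",
    url="https://help.example.com/reset-password",  # hypothetical URL
    date_modified="2024-05-01",
))
```

Generating the markup from the same fields your knowledge base already stores (title, summary, last-updated date) keeps it in sync when articles are revised.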

Wrapping Up

Crafting clear and concise knowledge base articles is fundamental to enhancing the customer experience and empowering users to solve problems independently.

By following the strategies outlined in this article, from understanding your audience and crafting effective titles to structuring your content and optimizing for search, you can create a genuinely valuable knowledge base that not only resolves issues but also strengthens how users see your business.
